
Getting Started with Alveo FPGA acceleration

Description

Compute servers in COSMOS and cloud computing nodes in ORBIT Sandbox 9 are equipped with Alveo U200 accelerator cards (Virtex UltraScale+ XCU200-2FSGD2104E FPGA). These cards can accelerate compute-intensive applications such as machine learning and video processing. Each card is connected to the Intel Xeon host CPU over a PCI Express® (PCIe) Gen3 x16 bus.

This tutorial demonstrates how to run an accelerated FPGA kernel on the platform described above. The Vitis unified software platform 2019.2 is used to develop and deploy the application.

Prerequisites

To access the testbed, create a reservation and have it approved by the reservation service. Access to the resources is granted once the reservation is confirmed. Follow the process shown on the COSMOS workflow page to get started.

Resources required

One COSMOS compute server or one node in ORBIT SB9. This tutorial uses a compute server in the COSMOS bed domain.

Tutorial Setup

Follow the steps below to gain access to the console of the reserved domain and set up the node with the appropriate image.

- If you don't have one already, sign up for a COSMOS account

- Create a resource reservation in the COSMOS bed domain.

- Once the reservation is approved, log in to the console.

- Use OMF commands to load the alveo-runtime.ndz image on your resource.

- Make sure the server/node used in the experiment is turned off:

omf tell -a offh -t srv1-lg1

- Load alveo-runtime.ndz on the server/node. This image comes with Vitis 2019.2, XRT (Xilinx Runtime), and the Alveo U200 XDMA deployment shell installed.

omf load -i alveo-runtime.ndz -t srv1-lg1

- Once the node has been imaged successfully, turn it on and check its status:

omf tell -a on -t srv1-lg1

omf stat -t srv1-lg1

- After giving it some time to power up and boot, ssh to the node

ssh root@srv1-lg1
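
The imaging steps above always run in the same order, so they are easy to script. The following is a minimal Python sketch that only assembles the command strings shown in this section; the helper name `omf_imaging_commands` is hypothetical, and the sketch does not run the commands or wait for the node to boot.

```python
def omf_imaging_commands(node, image="alveo-runtime.ndz"):
    """Return the OMF commands from the setup steps, in the order they are run."""
    return [
        f"omf tell -a offh -t {node}",     # make sure the node is powered off
        f"omf load -i {image} -t {node}",  # load the image onto the node
        f"omf tell -a on -t {node}",       # power the node back on
        f"omf stat -t {node}",             # check the node status
    ]


# Print the sequence for the node used in this tutorial.
for command in omf_imaging_commands("srv1-lg1"):
    print(command)
```

The printed lines can be pasted into the console one at a time; the node still needs some time to boot before the ssh step.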

Experiment Execution

Validate Card and check shell installation

- The reserved resource (node in SB9, orbit) has an Alveo U200 card attached over the PCIe bus. Check whether the card is installed correctly and whether its firmware matches the shell installed on the host by running the lspci command as shown below. If the card is installed correctly, two physical functions are listed per card: one for management and one for user access.

root@srv1-lg1:~# sudo lspci -vd 10ee:
d8:00.0 Processing accelerators: Xilinx Corporation Device d000
        Subsystem: Xilinx Corporation Device 000e
        Flags: bus master, fast devsel, latency 0, IRQ 267, NUMA node 1
        Memory at f0000000 (32-bit, non-prefetchable) [size=32M]
        Memory at f2000000 (32-bit, non-prefetchable) [size=64K]
        Capabilities: [40] Power Management version 3
        Capabilities: [48] MSI: Enable- Count=1/1 Maskable- 64bit+
        Capabilities: [70] Express Endpoint, MSI 00
        Capabilities: [100] Advanced Error Reporting
        Capabilities: [1c0] #19
        Capabilities: [400] Access Control Services
        Kernel driver in use: xclmgmt
        Kernel modules: xclmgmt

- The above output shows only the management function. In that case, update the firmware on the FPGA as follows. xilinx_u200_xdma_201830_2 is the deployment shell installed on the alveo-runtime.ndz image. The update takes a few minutes to complete.

root@srv1-lg1:~# sudo /opt/xilinx/xrt/bin/xbmgmt flash --update --shell xilinx_u200_xdma_201830_2
Status: shell needs updating
Current shell: xilinx_u200_GOLDEN_3
Shell to be flashed: xilinx_u200_xdma_201830_2
Are you sure you wish to proceed? [y/n]: y
Updating SC firmware on card[0000:d8:00.0]
INFO: found 4 sections
.....................................
INFO: Loading new firmware on SC
Updating shell on card[0000:d8:00.0]
INFO: ***Found 700 ELA Records
Idcode byte[0] ff
Idcode byte[1] 20
Idcode byte[2] bb
Idcode byte[3] 21
Idcode byte[4] 10
Enabled bitstream guard. Bitstream will not be loaded until flashing is finished.
Erasing flash...................................
Programming flash...................................
Cleared bitstream guard. Bitstream now active.
Successfully flashed Card[0000:d8:00.0]
1 Card(s) flashed successfully.
Cold reboot machine to load the new image on card(s).

- Power cycle the node using omf offh and on commands. Once the node is on, ssh to the node.

- Run lspci command to verify the updated firmware. The output now shows both the management and user functions.

root@srv1-lg1:~# lspci -vd 10ee:
d8:00.0 Processing accelerators: Xilinx Corporation Device 5000
        Subsystem: Xilinx Corporation Device 000e
        Flags: bus master, fast devsel, latency 0, NUMA node 1
        Memory at 387ff2000000 (64-bit, prefetchable) [size=32M]
        Memory at 387ff4000000 (64-bit, prefetchable) [size=128K]
        Capabilities: [40] Power Management version 3
        Capabilities: [60] MSI-X: Enable+ Count=33 Masked-
        Capabilities: [70] Express Endpoint, MSI 00
        Capabilities: [100] Advanced Error Reporting
        Capabilities: [1c0] #19
        Capabilities: [400] Access Control Services
        Capabilities: [410] #15
        Kernel driver in use: xclmgmt
        Kernel modules: xclmgmt

d8:00.1 Processing accelerators: Xilinx Corporation Device 5001
        Subsystem: Xilinx Corporation Device 000e
        Flags: bus master, fast devsel, latency 0, IRQ 289, NUMA node 1
        Memory at 387ff0000000 (64-bit, prefetchable) [size=32M]
        Memory at 387ff4020000 (64-bit, prefetchable) [size=64K]
        Memory at 387fe0000000 (64-bit, prefetchable) [size=256M]
        Capabilities: [40] Power Management version 3
        Capabilities: [60] MSI-X: Enable+ Count=33 Masked-
        Capabilities: [70] Express Endpoint, MSI 00
        Capabilities: [100] Advanced Error Reporting
        Capabilities: [400] Access Control Services
        Capabilities: [410] #15
        Kernel driver in use: xocl
        Kernel modules: xocl
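
The check described above (one management function bound to xclmgmt, one user function bound to xocl) can also be done programmatically. The following is a rough Python sketch, assuming the lspci output has one device header per line followed by its attribute lines; the function names and the abbreviated `SAMPLE` text are illustrative only.

```python
import re

# Abbreviated excerpt of the lspci output above (most attribute lines omitted).
SAMPLE = """\
d8:00.0 Processing accelerators: Xilinx Corporation Device 5000
\tKernel driver in use: xclmgmt
d8:00.1 Processing accelerators: Xilinx Corporation Device 5001
\tKernel driver in use: xocl
"""


def drivers_by_function(lspci_text):
    """Map each PCI address (bus:device.function) to its bound kernel driver."""
    drivers = {}
    address = None
    for line in lspci_text.splitlines():
        header = re.match(r"^([0-9a-f]{2}:[0-9a-f]{2}\.[0-7])\s", line)
        if header:
            address = header.group(1)
            drivers[address] = None  # no driver seen yet for this function
            continue
        bound = re.search(r"Kernel driver in use:\s*(\S+)", line)
        if bound and address is not None:
            drivers[address] = bound.group(1)
    return drivers


def has_both_functions(drivers):
    """True when both the management and the user driver are bound."""
    return {"xclmgmt", "xocl"} <= set(drivers.values())


print(drivers_by_function(SAMPLE))
print(has_both_functions(drivers_by_function(SAMPLE)))
```

If only the xclmgmt entry is found, the card is still running the golden image and the firmware update step applies.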

- Use the xbmgmt flash --scan command to view and validate the card's current firmware version and to display the installed card details.

root@srv1-lg1:~# /opt/xilinx/xrt/bin/xbmgmt flash --scan
Card [0000:d8:00.0]
    Card type: u200
    Flash type: SPI
    Flashable partition running on FPGA:
        xilinx_u200_xdma_201830_2,[ID=0x000000005d1211e8],[SC=4.2.0]
    Flashable partitions installed in system:
        xilinx_u200_xdma_201830_2,[ID=0x000000005d1211e8],[SC=4.2.0]

Note that the shell version running on the FPGA (Flashable partition running on FPGA) matches the one installed on the host (Flashable partitions installed in system).
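
The comparison between the two shell entries can be automated. Below is a small Python sketch that extracts both entries from the scan output with regular expressions; it assumes the two labels appear exactly as printed above, and the helper names are hypothetical.

```python
import re

# Scan output as shown above.
SCAN_OUTPUT = """\
Card [0000:d8:00.0]
    Card type: u200
    Flash type: SPI
    Flashable partition running on FPGA:
        xilinx_u200_xdma_201830_2,[ID=0x000000005d1211e8],[SC=4.2.0]
    Flashable partitions installed in system:
        xilinx_u200_xdma_201830_2,[ID=0x000000005d1211e8],[SC=4.2.0]
"""


def shell_versions(scan_text):
    """Return (running, installed) shell entries, or None where not found."""
    running = re.search(r"Flashable partition running on FPGA:\s*(\S+)", scan_text)
    installed = re.search(r"Flashable partitions installed in system:\s*(\S+)", scan_text)
    return (
        running.group(1) if running else None,
        installed.group(1) if installed else None,
    )


def shells_match(scan_text):
    """True when the shell on the card equals the shell installed on the host."""
    running, installed = shell_versions(scan_text)
    return running is not None and running == installed


print(shells_match(SCAN_OUTPUT))
```

Because `\s*` also matches line breaks, the same patterns work whether the shell name is on the label line or on the line after it.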

- Use the xbutil validate command to validate the card and the drivers.

root@srv1-lg1:~# /opt/xilinx/xrt/bin/xbutil validate
INFO: Found 1 cards
INFO: Validating card[0]: xilinx_u200_xdma_201830_2
INFO: == Starting AUX power connector check:
INFO: == AUX power connector check PASSED
INFO: == Starting PCIE link check:
INFO: == PCIE link check PASSED
INFO: == Starting verify kernel test:
Host buffer alignment 4096 bytes
Compiled kernel = /opt/xilinx/dsa/xilinx_u200_xdma_201830_2/test/verify.xclbin
Error Exception: argument 1: <type 'exceptions.TypeError'>: Don't know how to convert parameter 1
FAILED TEST
ERROR: == verify kernel test FAILED
INFO: Card[0] failed to validate.
ERROR: Some cards failed to validate.

- If card validation fails as above, reload the drivers and run the validation again. Once the card validates successfully, it is ready to use.

root@srv1-lg1:~# rmmod xocl
root@srv1-lg1:~# rmmod xclmgmt
root@srv1-lg1:~# modprobe xocl
root@srv1-lg1:~# modprobe xclmgmt
root@srv1-lg1:~# /opt/xilinx/xrt/bin/xbutil validate
INFO: Found 1 cards
INFO: Validating card[0]: xilinx_u200_xdma_201830_2
INFO: == Starting AUX power connector check:
INFO: == AUX power connector check PASSED
INFO: == Starting PCIE link check:
INFO: == PCIE link check PASSED
INFO: == Starting verify kernel test:
INFO: == verify kernel test PASSED
INFO: == Starting DMA test:
Buffer Size: 256 MB
Host -> PCIe -> FPGA write bandwidth = 8402.31 MB/s
Host <- PCIe <- FPGA read bandwidth = 12156.5 MB/s
INFO: == DMA test PASSED
INFO: == Starting device memory bandwidth test:
...........
Maximum throughput: 52428 MB/s
INFO: == device memory bandwidth test PASSED
INFO: == Starting PCIE peer-to-peer test:
P2P BAR is not enabled. Skipping validation
INFO: == PCIE peer-to-peer test SKIPPED
INFO: == Starting memory-to-memory DMA test:
bank0 -> bank1 M2M bandwidth: 11990.1 MB/s
bank0 -> bank2 M2M bandwidth: 12025.6 MB/s
bank0 -> bank3 M2M bandwidth: 12038 MB/s
bank1 -> bank2 M2M bandwidth: 12057.3 MB/s
bank1 -> bank3 M2M bandwidth: 12025 MB/s
bank2 -> bank3 M2M bandwidth: 12058.4 MB/s
INFO: == memory-to-memory DMA test PASSED
INFO: Card[0] validated successfully.
INFO: All cards validated successfully.
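
To decide quickly whether a driver reload is needed, the validation log can be reduced to one status per test. The following Python sketch assumes each result is reported on its own line in the form `INFO: == <test name> PASSED`, `INFO: == <test name> SKIPPED`, or `ERROR: == <test name> FAILED`, as in the logs above; the function names are hypothetical.

```python
import re

# Excerpt of the failing validation run shown earlier in this section.
FAILED_RUN = """\
INFO: == Starting AUX power connector check:
INFO: == AUX power connector check PASSED
INFO: == Starting PCIE link check:
INFO: == PCIE link check PASSED
INFO: == Starting verify kernel test:
FAILED TEST
ERROR: == verify kernel test FAILED
"""

# Result lines only; the "Starting ..." announcements are excluded.
RESULT_LINE = re.compile(
    r"^(?:INFO|ERROR): == (?!Starting )(.+) (PASSED|FAILED|SKIPPED)$"
)


def summarize_validation(output):
    """Return a dict mapping each test name to PASSED, FAILED, or SKIPPED."""
    results = {}
    for line in output.splitlines():
        match = RESULT_LINE.match(line.strip())
        if match:
            results[match.group(1)] = match.group(2)
    return results


def any_failed(results):
    """True when at least one test reported FAILED."""
    return "FAILED" in results.values()


summary = summarize_validation(FAILED_RUN)
print(summary)
print("reload drivers and re-run" if any_failed(summary) else "card is ready")
```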

- For more information on card startup, refer to https://www.xilinx.com/support/documentation/boards_and_kits/accelerator-cards/ug1301-getting-started-guide-alveo-accelerator-cards.pdf

Run an accelerated application

- Multiple examples from a couple of Xilinx repositories have been cloned to the node image. This tutorial demonstrates a simple matrix multiplication application obtained from https://github.com/Xilinx/Vitis-Tutorials/blob/master/docs/Pathway3. The xclbin files for the software emulation, hardware emulation, and hardware targets were built following the instructions in the Vitis-Tutorials repository. The host code, slightly modified to display the output, was also built. Run the accelerated application on the Alveo U200 target using the following command:

root@srv1-lg1:~/Vitis-Tutorials/docs/Pathway3/reference-files/run# ./host mmult.hw.xilinx_u200_xdma_201830_2.xclbin
Found Platform
Platform Name: Xilinx
INFO: Reading mmult.hw.xilinx_u200_xdma_201830_2.xclbin
Loading: 'mmult.hw.xilinx_u200_xdma_201830_2.xclbin'
INPUT MATRIX
0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5
6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1
2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7
8 9 0 1 2 3 4 5 6 7 8 9 0 1 2 3
4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9
0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5
6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1
2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7
8 9 0 1 2 3 4 5 6 7 8 9 0 1 2 3
4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9
0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5
6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1
2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7
8 9 0 1 2 3 4 5 6 7 8 9 0 1 2 3
4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9
0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5
OUTPUT MATRIX
250 310 230 290 270 330 220 280 230 290 250 310 230 290 270 330
330 406 262 338 254 330 356 432 318 394 330 406 262 338 254 330
250 322 294 366 298 370 312 384 286 358 250 322 294 366 298 370
250 318 306 374 222 290 248 316 334 402 250 318 306 374 222 290
290 374 298 382 366 450 344 428 382 466 290 374 298 382 366 450
250 310 230 290 270 330 220 280 230 290 250 310 230 290 270 330
330 406 262 338 254 330 356 432 318 394 330 406 262 338 254 330
250 322 294 366 298 370 312 384 286 358 250 322 294 366 298 370
250 318 306 374 222 290 248 316 334 402 250 318 306 374 222 290
290 374 298 382 366 450 344 428 382 466 290 374 298 382 366 450
250 310 230 290 270 330 220 280 230 290 250 310 230 290 270 330
330 406 262 338 254 330 356 432 318 394 330 406 262 338 254 330
250 322 294 366 298 370 312 384 286 358 250 322 294 366 298 370
250 318 306 374 222 290 248 316 334 402 250 318 306 374 222 290
290 374 298 382 366 450 344 428 382 466 290 374 298 382 366 450
250 310 230 290 270 330 220 280 230 290 250 310 230 290 270 330
TEST PASSED

- The host code uses OpenCL API calls to send both input matrices to the device, configure the kernel, start the task, and read the output matrix back. The received result is then compared with a reference result computed on the host.
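
The host-side reference check can be illustrated without an FPGA. The Python sketch below is not the tutorial's host code: it assumes, based only on the printed output above, that both input matrices are the same 16x16 matrix filled with the values 0 to 9 in row-major order. Under that assumption the plain triple-loop product reproduces the first entries of the printed output matrix.

```python
N = 16  # matrix dimension, inferred from the printed 256 input values


def input_matrix(n=N):
    """Fill an n x n matrix row by row with the repeating digits 0..9."""
    return [[(n * i + j) % 10 for j in range(n)] for i in range(n)]


def matmul(a, b):
    """Reference matrix product, as a host would compute it for comparison."""
    n = len(a)
    return [
        [sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
        for i in range(n)
    ]


A = input_matrix()
C = matmul(A, A)  # assumption: both kernel inputs are the same matrix

# The first four entries of row 0 are 250, 310, 230, 290, as in the output above.
print(C[0][:4])
```

A real host program performs the same element-wise comparison between this reference and the buffer read back from the device before printing TEST PASSED.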

Analyze FPGA kernel performance

- The Vitis platform can generate several reports that help to understand and analyze the accelerated application, including the profile summary, timeline trace, and waveform view. These reports can be viewed with Vitis analyzer.

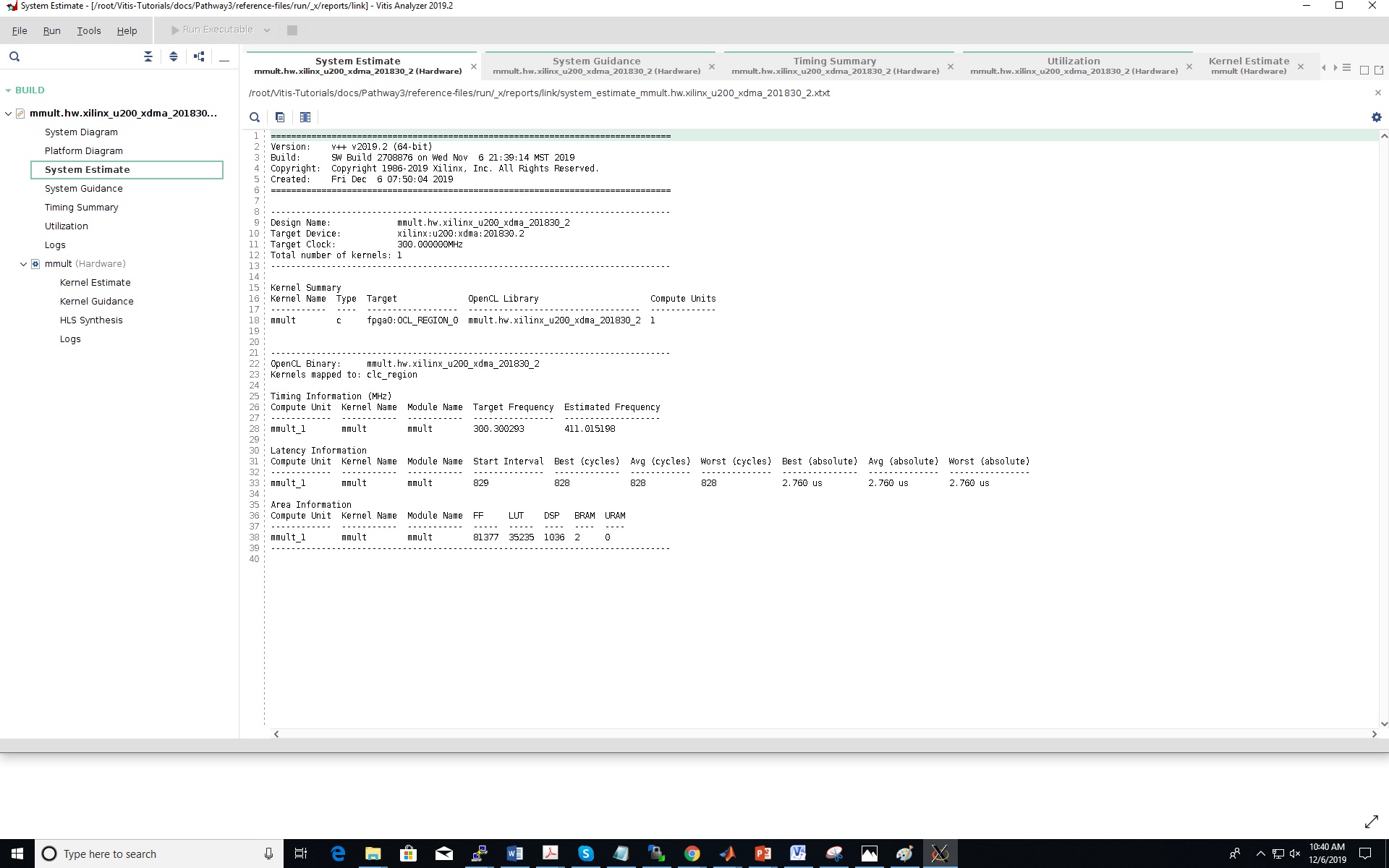

- The system estimate report is generated while building the hardware target xclbin file. It gives information on resource usage, clock frequency, estimated kernel latency, etc.

root@srv1-lg1:~/Vitis-Tutorials/docs/Pathway3/reference-files/run# vitis_analyzer mmult.hw.xilinx_u200_xdma_201830_2.xclbin.link_summary


- Waveform view: while generating the hardware emulation target, the waveform view can be enabled by editing the xrt.ini file. With this option enabled, kernel signals are displayed as waveforms while hardware emulation runs. Hardware emulation can be run as follows:

export XCL_EMULATION_MODE=hw_emu
root@srv1-lg1:~/Vitis-Tutorials/docs/Pathway3/reference-files/run# ./host mmult.hw_emu.xilinx_u200_xdma_201830_2.xclbin
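
The xrt.ini file mentioned above is a plain INI file placed next to the host executable. The Python sketch below writes one with the standard `configparser` module. The section and key names (`[Debug]` with `profile` and `timeline_trace`, `[Emulation]` with `launch_waveform`) are assumptions recalled from the Vitis 2019.2 documentation rather than taken from this page, so verify them against the Xilinx documentation for the installed version.

```python
import configparser
import io


def build_xrt_ini(waveform_mode="batch"):
    """Return xrt.ini contents enabling profiling and the waveform view.

    All section and key names here are assumptions; check the Vitis docs.
    """
    config = configparser.ConfigParser()
    config["Debug"] = {"profile": "true", "timeline_trace": "true"}
    config["Emulation"] = {"launch_waveform": waveform_mode}
    buffer = io.StringIO()
    # Write "key=value" without spaces around the delimiter.
    config.write(buffer, space_around_delimiters=False)
    return buffer.getvalue()


# Print the file contents; redirect to xrt.ini in the run directory to use them.
print(build_xrt_ini())
```

The value `batch` is meant to record waveforms without opening a viewer; an interactive mode may be more useful when working on a machine with a display.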
